Old School techniques to hide from modern-day timelines

This post is really about knowing the limitations of your tools and the assumptions you may have about them. This post is not meant to point out good or bad in any specific tools, but to highlight the need to test and fully understand what is happening with the tools you favor so you do not make a critical assumption that haunts you later.

Many examiners today rely on timelines and super timelines to get an overall view of what is happening on affected hosts. With that in mind, let's walk through a simple scenario.

Let's imagine we have imaged a hard drive and are ready to begin our analysis. Some may load the image into their favorite forensic analysis platform and at some point sort the objects by one of the four timestamps commonly shown for each file on an NTFS volume. Over the years, many have begun to recognize the value of looking at the four additional timestamps that many forensic tools don't show you, the ones in the filename attribute. Rob Lee even did a great flow chart showing the various actions that trigger each of the eight timestamps.

Alternatively, many have opted for timeline-building tools such as log2timeline, analyzeMFT, mft2csv and others to extract information from each volume's MFT (and other sources) and then display it in an overall 'view' of activity.
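As a rough illustration of what such tools read, here is a minimal Python sketch that decodes the eight timestamps from raw attribute content. It assumes the commonly documented NTFS layout: four 64-bit FILETIME values at the start of the $Standard Information content, and the same four values after an 8-byte parent reference in the $Filename content. The function names are illustrative and are not taken from any of the tools mentioned.

```python
import struct
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond ticks since 1601-01-01 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
LABELS = ("created", "modified", "mft_modified", "accessed")


def filetime_to_dt(ft: int) -> datetime:
    """Convert a FILETIME value to a timezone-aware datetime."""
    return FILETIME_EPOCH + timedelta(microseconds=ft // 10)


def si_times(content: bytes) -> dict:
    """Four timestamps at the start of $Standard Information content."""
    raw = struct.unpack_from("<4Q", content, 0)
    return dict(zip(LABELS, map(filetime_to_dt, raw)))


def fn_times(content: bytes) -> dict:
    """Four timestamps in $Filename content, after the 8-byte parent reference."""
    raw = struct.unpack_from("<4Q", content, 8)
    return dict(zip(LABELS, map(filetime_to_dt, raw)))
```

Calling both functions on one record's attributes gives the eight values a timeline can be built from.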

Let's walk through a quick example to highlight the main point of this post.

For brevity and to remove a lot of unnecessary 'noise', I will use a simple NTFS volume in this example. It is so simple that it has only one MFT record in addition to the default records that are created on a newly formatted volume. Here is a view of the volume in Windows Explorer with the single file.

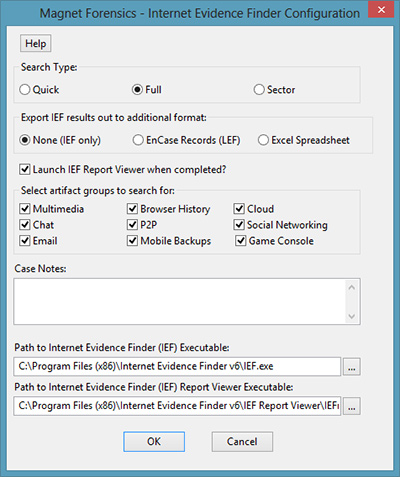

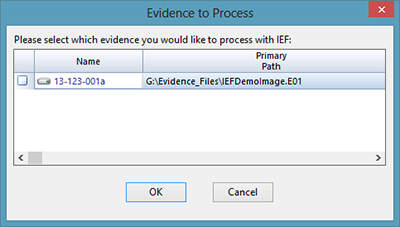

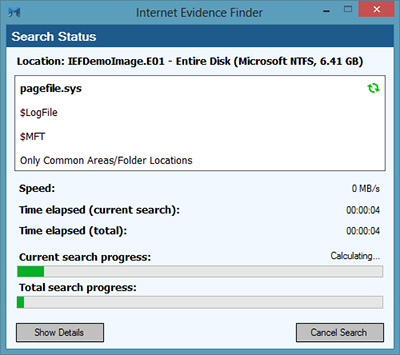

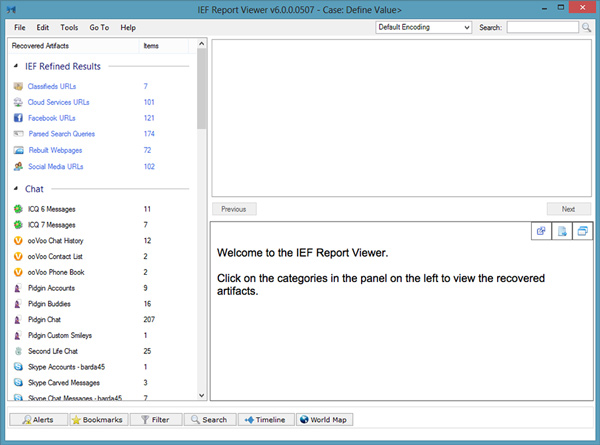

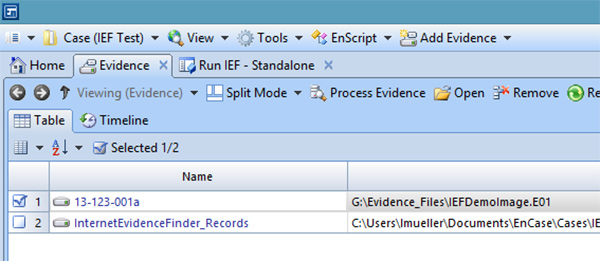

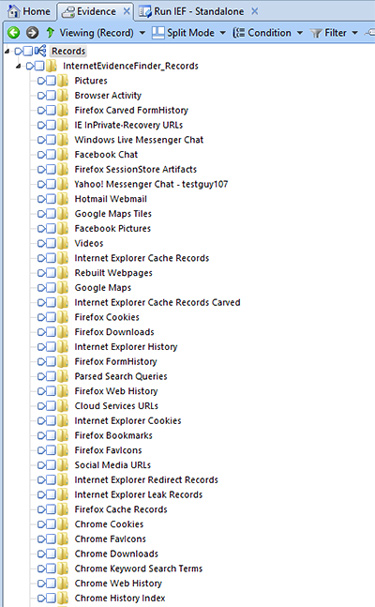

At some point, I may decide to create a timeline of this volume so I can see all of the files/folders and other time-based artifacts using a tool of my choice:

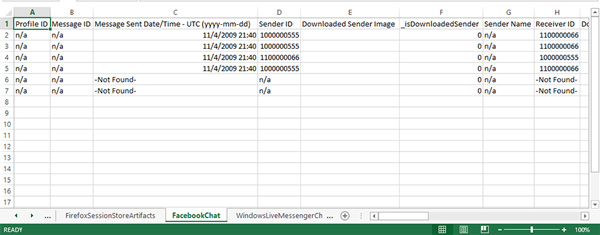

And here is output from another tool with all eight timestamps (internal NTFS records omitted from view, but nothing else):

Looks good so far.

So, I sort all the records (in this example case there are just a few) and I don't find anything with timestamps in the time period related to the incident or investigation. Hmmm... I guess I need to look elsewhere.

What I don't know is that the attacker has used an old school trick to hide his tool and it's not being displayed in my timeline.

Alternate Data Streams

Alternate Data Streams (ADS) have been a feature of NTFS since it was first introduced with Windows NT. They are most commonly used by the operating system itself, but otherwise they are not used much from a user perspective. In fact, the Windows GUI does not even let you see these streams without third-party tools.

An alternate data stream is nothing more than an additional stream of data in a single MFT record. Normally there is one MFT record for each file/folder on the volume, but with alternate data streams one record can hold many files.

An MFT record can hold any number of streams, confined only by the limitations of a single MFT record. This means a single MFT record can have multiple files associated with it. The initial file is the controlling file: it is the one that creates the MFT record, and it is responsible for populating the $Standard Information and $Filename attributes with the associated metadata. Delete the initial file and all of its streams go away as well. Copy the file from one NTFS volume to another and the alternate data stream(s) go with it.

Earlier I said every file/folder on an NTFS volume has eight timestamps, but that's not entirely accurate.

Data in an alternate data stream is called a "named stream". It is stored in an additional, named $Data attribute within the controlling file's MFT record, so it does not have its own $Standard Information attribute or $Filename attribute. The alternate data stream piggybacks on the controlling (initial) file's SIA and FN attributes. Therefore, any file in an ADS has no timestamps associated with the stream data itself.
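To make that structure concrete, here is a minimal Python sketch that walks the attribute headers of one raw MFT record and reports any named $Data attributes. It assumes the commonly documented record layout (signature `FILE`, offset to the first attribute at byte 20, attribute type and length in the first 8 bytes of each header, name length at byte 9 and name offset at byte 10). It does not apply the update sequence fixups, so treat it as an illustration rather than a parser to rely on in casework. The function names are hypothetical.

```python
import struct

ATTR_NAMES = {0x10: "$STANDARD_INFORMATION", 0x30: "$FILE_NAME", 0x80: "$DATA"}
END_MARKER = 0xFFFFFFFF


def list_attributes(record: bytes):
    """Return (attribute_type, attribute_name) pairs for one FILE record."""
    if record[:4] != b"FILE":
        raise ValueError("not an MFT FILE record")
    offset = struct.unpack_from("<H", record, 20)[0]  # first attribute
    found = []
    while offset + 16 <= len(record):
        attr_type, attr_len = struct.unpack_from("<II", record, offset)
        if attr_type == END_MARKER or attr_len < 16:
            break
        # Name length is counted in UTF-16 characters, not bytes.
        name_len, name_off = struct.unpack_from("<BH", record, offset + 9)
        start = offset + name_off
        name = record[start:start + 2 * name_len].decode("utf-16-le")
        found.append((ATTR_NAMES.get(attr_type, hex(attr_type)), name))
        offset += attr_len
    return found


def named_streams(record: bytes):
    """Names of alternate data streams: $DATA attributes that carry a name."""
    return [name for kind, name in list_attributes(record)
            if kind == "$DATA" and name]
```

A record holding a hidden tool would show one unnamed $DATA attribute for the controlling file and one named $DATA attribute per stream, while still carrying only a single set of $STANDARD_INFORMATION and $FILE_NAME timestamps.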

What does this mean?

Well, if I put my malicious tool (putty in this example) into an ADS:
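One way to do this, sketched in Python, relies on the `file:stream` path syntax that Windows accepts on NTFS volumes. The helper name and the file names in the usage note are hypothetical, and this is not necessarily the method used for the example volume in this post.

```python
def write_stream(host_path: str, stream_name: str, payload: bytes) -> str:
    """Write payload into the stream `stream_name` behind `host_path`.

    On Windows with NTFS, "host:stream" addresses an alternate data stream
    of the host file. On other filesystems the same path is simply a
    separate file whose name contains a colon, so nothing is hidden there.
    """
    stream_path = f"{host_path}:{stream_name}"
    with open(stream_path, "wb") as fh:
        fh.write(payload)
    return stream_path
```

For example, `write_stream(r"E:\old_report.txt", "putty.exe", data)` would place `data` behind `old_report.txt` on an NTFS volume without changing the size reported for the controlling file.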

None of its original timestamps are 'transferred' or recorded in the new stream. Depending on the tool you use to create a timeline, that malicious file may not even be found/listed (as shown in the two previous examples), therefore it won't even show up in a sorted timeline.

Here are the original timestamps on the file I placed into the alternate data stream:

Notice that those dates are not shown in either of the example timelines above.

As an attacker, if I pick an existing file that has an old creation timestamp and put my file into an ADS behind it, I will trigger the entry modified timestamp for the original file, but that MFT record will still report the old created date, even though I just stuffed a brand new malicious file into an ADS. I never even have to take that file out of the ADS to run it; I can invoke it directly as it sits inside the ADS. How often do we pay close attention to a triggered record modified date? Is that field included in your timelines?

Would you see this 'hidden' file (alternate data stream) in a tool such as FTK, EnCase or X-Ways? Absolutely. But if you sort the files by a timestamp (MACE), then depending on how the tool itself deals with these additional streams, the alternate data streams go to the top (or bottom, depending on sort order) because they have no timestamps of their own. So, unless you are specifically looking for alternate data streams, they are easy to miss and will not appear in your chronological timeline.
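One possible mitigation, shown here as a minimal Python sketch, is to have the timeline borrow the controlling file's entry modified timestamp for each stream so the stream sorts next to its host instead of falling to one end. The `Entry` type and its fields are hypothetical and do not reflect how any particular tool models its data.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Entry:
    record: int                        # MFT record number
    name: str                          # name of the controlling file
    mft_modified: Optional[datetime]   # None for a named stream
    stream: Optional[str] = None       # stream name; None for the host file


def timeline(entries: List[Entry]) -> List[Entry]:
    """Sort chronologically, placing each stream right after its host file."""
    host_time = {e.record: e.mft_modified for e in entries if e.stream is None}

    def key(e: Entry):
        # A stream has no timestamp of its own, so borrow the host's.
        ts = e.mft_modified or host_time.get(e.record) or datetime.min
        return (ts, e.record, e.stream is not None, e.stream or "")

    return sorted(entries, key=key)


def streams_only(entries: List[Entry]) -> List[Entry]:
    """Every alternate data stream, for separate review."""
    return [e for e in entries if e.stream is not None]
```

Reviewing the `streams_only` list on its own is the simpler check; the borrowed timestamp just keeps streams visible when working chronologically.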

This is an old school trick that does not require any special tools or programs (timestomp, setMACE), yet it seems to work pretty well against modern analysis techniques.

Download Example MFT

Download E01 image file
